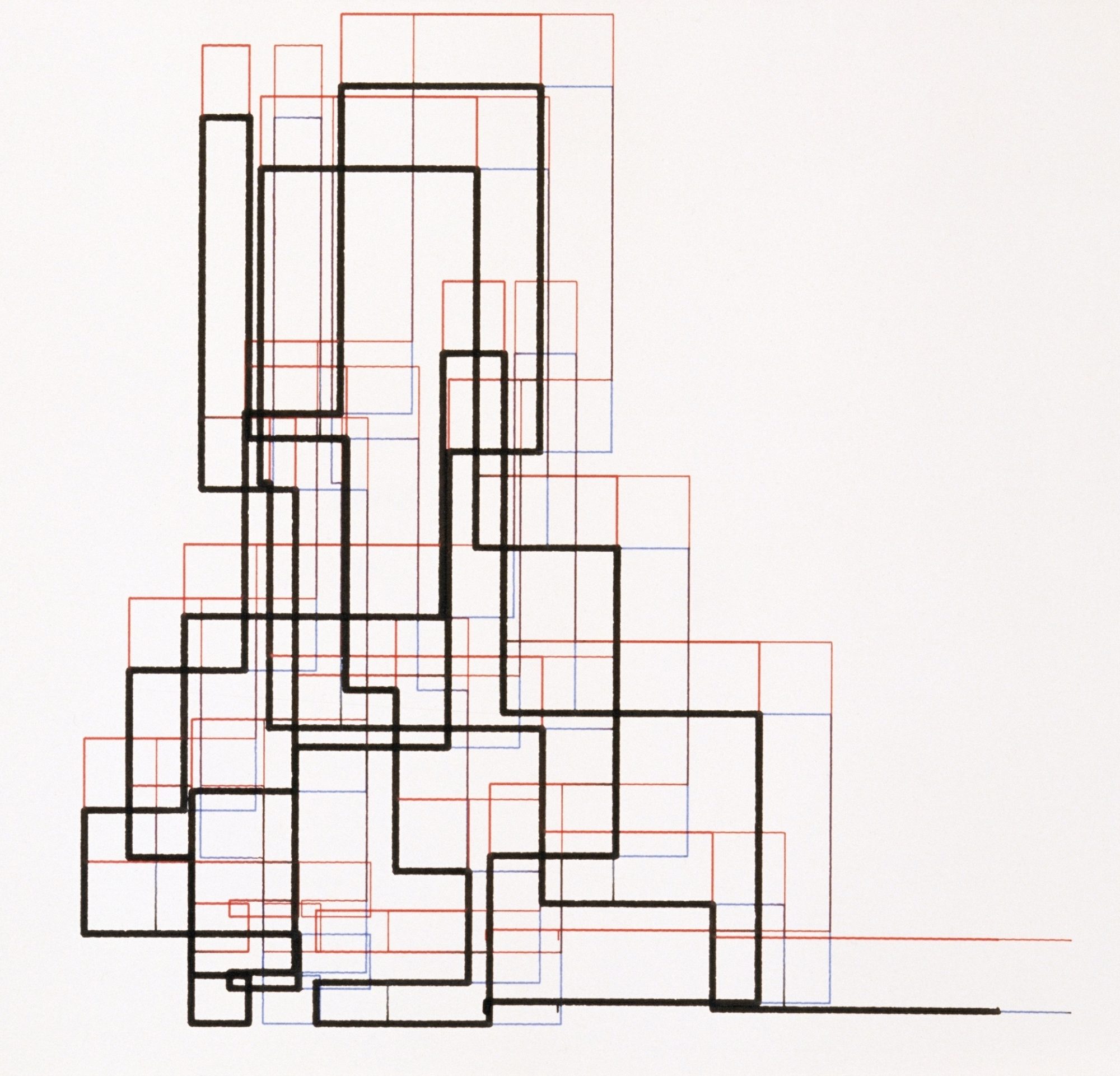

Frieder Nake Achsenparalleler Polygonzug (1965) photo credit: DAM projects GmbH.

The power of computing comes from its idiosyncratic inflexibility. Every programmer will tell you that the computer does exactly what you tell it to - even when it’s not working as expected. But the power of the digital computer remains self-evident in spite of these limitations. There are two primary reasons:

- Algorithms: computers execute perfectly reproducible runs (ignoring rare, cosmic events like random bit flips)

- Data: computers can reliably transform information from one kind to another with perfect fidelity

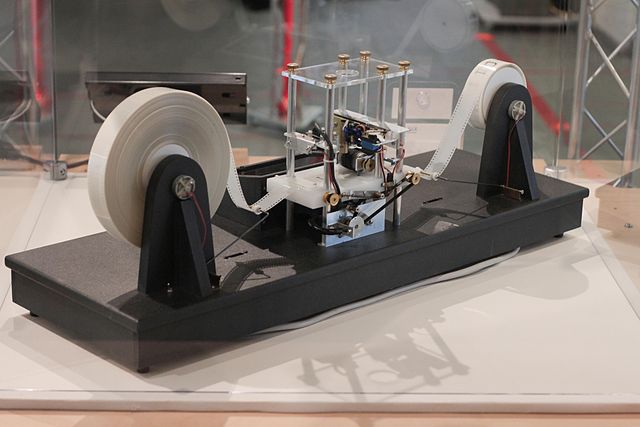

Theories of computation are mostly centered on the first issue. Alan Turing’s foundational 1936 paper focuses on how Turing machines execute algorithms. Even though the machines store their state as data, Turing’s emphasis is on how the instructions change the machine from one state to another.

The sequence of symbols on the tape that runs through Turing’s hypothetical machine is data. But the machine’s m-configuration (Turing’s term), which is set after every read from the tape, must also be stored. The outcome of reading a symbol off the tape depends on the m-configuration. But Turing spends little time describing the physical properties of m-configuration storage. They could be mechanical switches, electrons in silicon, or a configuration of neurons in the brain. The paper is rightfully focused on knowing whether or not an algorithm will terminate in advance of its execution.

[Image: Mike Davey Turing Machine reconstruction as seen at Go Ask ALICE at Harvard University (2012) CC BY 3.0 Deed]

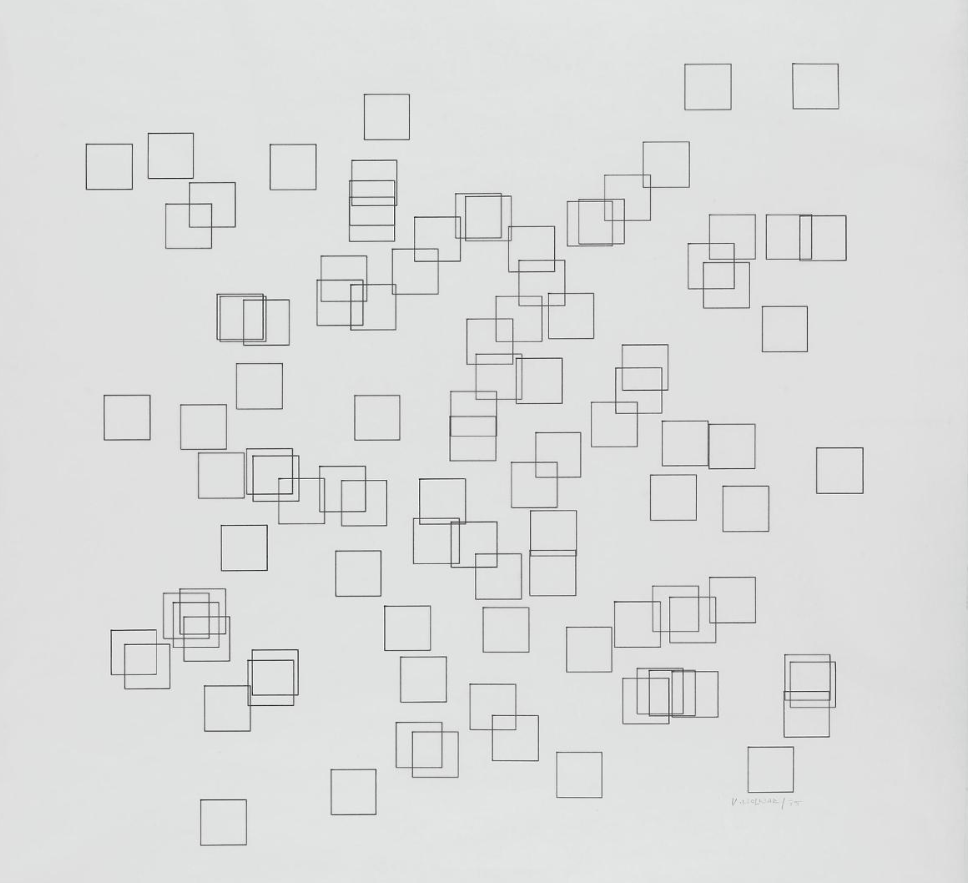

Algorithmic experimentation was also the focus of early computer art. Achsenparalleler Polygonzug, pictured above, was Nake’s expression of generative aesthetics. One of his contemporaries, Vera Molnár, even produced drawings using a method called imaginary machine (i.e. algorithms executed by hand) before she had access to a computer.

Vera Molnár Déambulation entre ordre et chaos (1975) Adagp, Paris; photo credit: Georges Meguerditchian - Centre Pompidou

Adding a bit of randomness to the algorithm creates an infinite set of aesthetic variations. Generative artists like Nake and Molnár often curate from this point by selecting the best outcomes. This intuitive practice is still common today. But this type of artwork doesn’t leverage the second aspect of digital computers that makes them so powerful: manipulating information with perfect fidelity.
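To make the idea of randomness plus curation concrete, here is a minimal sketch of a generative drawing routine. It is not Nake’s or Molnár’s actual program. The function name `axis_parallel_polyline` and its parameters are made up for illustration. It simply alternates horizontal and vertical segments of random length, and it uses a seed so that any variation worth keeping can be reproduced exactly.

```python
import random


def axis_parallel_polyline(seed, steps=12, max_len=10):
    """Return the points of a polyline whose segments alternate
    between horizontal and vertical moves of random signed length.

    Hypothetical illustration only, not a reconstruction of any artwork.
    """
    rng = random.Random(seed)  # private generator: same seed, same drawing
    x, y = 0, 0
    points = [(x, y)]
    for i in range(steps):
        length = rng.randint(-max_len, max_len)
        if i % 2 == 0:
            x += length  # horizontal segment
        else:
            y += length  # vertical segment
        points.append((x, y))
    return points


# Generate a handful of variations; an artist would curate from these.
variations = [axis_parallel_polyline(seed) for seed in range(5)]
```

Keeping the seed alongside each outcome is one way to pair the intuitive act of selecting with the reproducibility described at the top of this post.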

When algorithms are combined with data, something empirical can be said about the information and how it was derived. Explicit connections can be made between bits of information or even between versions of the same information as it changed over time. The connections may be inferential, causal, or even oblique. In any case, they are deterministic, meaning it’s possible to know how and why the machine independently brought two pieces of information together.

Linking Ideas

The earliest forays into artificial intelligence by Allen Newell, Cliff Shaw, and Herbert A. Simon studied the way in which a machine could autonomously create inferences between statements. They created the Information Processing Language (IPL) in the mid-1950s to tackle this problem. The language’s flexibility laid the foundation for a collection of heuristics that became known as Logic Theorist - a program built to solve mathematical proofs. (The JOHNNIAC computer, named after John von Neumann, ran Logic Theorist.) The software successfully used heuristic methods to prove 38 of the first 52 theorems in Principia Mathematica, a significant feat considering the impracticality of trying every possible proof among trillions of options.

Not only was this the first time a non-human ever successfully performed such a task, Logic Theorist even came up with novel solutions and refinements to existing proofs. (While undoubtedly a landmark in artificial intelligence and our general understanding of intelligence, Logic Theorist did not seem to impress when presented at the first ever Dartmouth Summer Research Project on Artificial Intelligence, according to an account in Pamela McCorduck’s book, Machines Who Think.)

They accomplished this feat by representing the rules of inference as elements in a list. More importantly, IPL allowed the researchers to use a flexible type of list called a linked list. This concept will come up again and again in everyday computer technology, so it’s important to understand why this simple structure enabled such a significant breakthrough. A typical representation of a list is a series of sequential items:

But the IPL team represented every item in the list as a linked element. This made it easy for the machine to traverse the list of inferences and modify each step in a mathematical proof by drawing a new arrow. It’s the difference between doing the work of pointing to the thing you want versus actually moving everything around so you can grab the thing you want.

Memory and processing cycles were scarce in early computing. So any optimization was a major victory. But it turns out that flexible links of information are more than just an implementation convenience. Early forays into artificial intelligence are just one point of evidence. Hypertext soon emerged as a way for people to create links in networks of information. This idea born of the 1960s was so profound that it would become the foundation of the World Wide Web decades later. As the man who named the concept, Ted Nelson, explains,

By “hypertext” I mean non-sequential writing – text that branches and allows choices to the reader, best read at an interactive screen. As popularly conceived, this is a series of text chunks connected by links which offer the reader different pathways.

(Ted Nelson, Literary Machines. (Swarthmore, PA: Self-published, 1981) via George P. Landow, Hypertext 3.0: Critical Theory and New Media in an Era of Globalization (Baltimore, MD: The Johns Hopkins University Press, 2006), 2-3.)

Non-linear Editing

Linked information pervades the production and consumption of media today. Filmmaking was the last of the legacy media formats to be impacted by this sea change. Although the flexibility of digital filmmaking existed throughout the 1990s, 2003’s Cold Mountain marks an inflection point. It was the first film of its scale to be edited on off-the-shelf Apple Macintosh G4s using software that cost under $1000. The editor, Walter Murch, was recognized with an Academy Award nomination, but it’s really his introspective process that provides the most lasting impact.

Select Cuts by Walter Murch: “The Rain People” (1969), sound montage § “The Godfather” (1972), supervising sound editor § “American Graffiti” (1973), sound montage § “The Conversation” (1974), film and sound editing § “Julia” (1977), film editor § “Apocalypse Now” (1979), film editor, sound design; Oscar nomination for picture editing; Oscar for sound editing § “The Unbearable Lightness of Being” (1987), supervising film editor § “The English Patient” (1996), film editor; first editor ever awarded Oscars for film and sound editing in same picture § “Touch of Evil” (1998), restoration film editing and sound § “The Talented Mr. Ripley” (1999), editor § “Apocalypse Now Redux” (2001), film editor

First, let’s set a few baseline numbers to understand what we’re talking about when a team edits a film like Cold Mountain. Murch estimates that they

shot and printed 600,000 feet of film, which is about 113 hours of material. The film is 2 hours 30 minutes long, so that’s a 30 or 40 to 1 ratio. [The first cut] was over 5 hours long. So you find more inventive ways to compress the story to find out what can be eliminated that not only doesn’t affect the story, but actually, by its elimination, improves things by putting into juxtaposition things that formerly were not. It was a very complex orchestration, shrinking it by half.

(Apple, Inc. “Walter Murch: An Interview with the Editor of ‘Cold Mountain.’” Apple - Pro - Profiles - Walter Murch, p. 3. Accessed January 7, 2024.)

Let’s first consider how someone had to cut 600,000 feet of film before the advent of digital tooling. In traditional film editing, a person had to rewind and fast forward through footage, catalog and mount reels of film, and physically splice celluloid. If two clips were on the same reel, the process was relatively straightforward.

Note that moving from Clip A to Clip B is a physical act of moving through time - both the time of the film (usually in fast-forward) and the time of the editor. Such edits may be done on a Steenbeck, a popular 20th century film editing station.

R Freeman Steenbeck film editing machine (2007) CC BY-NC-SA 2.0 Deed

Adding a clip from another reel means consulting a reference table, mounting the correct reel, and once again physically moving through time to arrive at the desired spot.

This act of moving through film strips sequentially, frame by frame, gave way to digital non-linear editing as soon as computers became capable. These days editors like Murch can instantly jump to any moment within any footage captured and cataloged. But the name non-linear editing partially obfuscates the power of the technique. Films were already non-linear in some sense. Almost all contemporary films use edits to indicate some instantaneous leap in time. That leap may be to a parallel timeline, a timeline in the past, or a timeline in the future.

The significance of the digital technique is how trivially an edit can be made, dramatically lowering the cost of experimentation. Much like Logic Theorist a half-century earlier, Murch can easily try different routes to optimize a 5-hour-long rough cut into a 2.5-hour-long final cut.

In fact, Newell and Simon’s work fueled hope that a new field of computational psychology would emerge. Logic Theorist and their subsequent General Problem Solver software allowed a person to observe the exact steps an autonomous being used to solve a problem. They hoped that these techniques would provide insights into how an artist made art, for example. As computer scientist Philip Agre observed in Computation and Human Experience, “In one of the papers in that volume Newell and Simon pursued the input-output matching view of computational theorizing, comparing the operation of the General Problem Solver (GPS) program step by step against the reports of an experimental subject. This was an extraordinary advance in psychological method: computer programming had made it imaginable to explain singular events, not just statistical averages over large populations.”

But 113 hours of film is a lot of data. Especially 20 years ago when Murch cut Cold Mountain on far less powerful machines than we have today. The trick is that the computer didn’t have to move footage around. It simply needed to link to files and add start and stop times as metadata. Here are the same three clips edited on a non-linear system:

This diagram looks more complex than the traditional edits above. But all this detail is simply structured data linked together - something that computers are extremely proficient at manipulating. A good user interface can turn this complexity into an elegant tool for people to use.
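The idea of linking to files and storing start and stop times as metadata can be sketched in a few lines. This is an illustrative model only, not how any particular editing application stores its projects. The `Clip` class, the `move_clip` helper, and the file names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    source: str   # path to the footage file; never copied or modified
    start: float  # seconds into the source where the clip begins
    end: float    # seconds into the source where the clip ends

    @property
    def duration(self) -> float:
        return self.end - self.start


def move_clip(timeline, old_index, new_index):
    """Return a new timeline with one clip moved.

    Only the small Clip references are reordered; the footage stays put.
    """
    edited = list(timeline)
    edited.insert(new_index, edited.pop(old_index))
    return edited


def running_time(timeline):
    """Total length of the cut in seconds."""
    return sum(clip.duration for clip in timeline)


# Hypothetical file names and timings, for illustration only.
timeline = [
    Clip("reel_01.mov", 12.0, 20.0),   # Clip A
    Clip("reel_01.mov", 95.0, 101.5),  # Clip B
    Clip("reel_07.mov", 3.0, 10.0),    # Clip C
]

# Try a different cut: open with Clip C. The original timeline is untouched.
recut = move_clip(timeline, 2, 0)
```

Because each experiment produces a new list of references rather than new footage, keeping several alternative cuts around costs almost nothing.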

While he was working on hypertext in the 1960s, Ted Nelson “came to see that the issues I was facing for electronic documents were not algorithms but data structure.” (Byron Reese, “50 Years Ago Today the Word ‘Hypertext’ Was Introduced,” GigaOm, August 24, 2015.) So let’s dive into the linked data structure and see what makes it so effective.

Linked Lists

The easiest way to demonstrate the power of a linked list is to “edit” an element in memory. As we saw earlier, it’s trivial to arrange sequential elements in memory. But what happens if we want to simply take the thing at the end of the list and put it in the beginning? It’s at least three edits if we want to keep the order of the sequence.

The first edit is holding that G somewhere while we do the work of moving everything down one space. Then each element must be moved to make room for the next element. And finally we can place the G where we want it, at the beginning of the list.
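The three kinds of edits described above (hold, shift, place) can be sketched as follows, assuming a seven-element list A through G stored sequentially. The function name and the write counter are illustrative additions that make the amount of work visible.

```python
def move_last_to_front(items):
    """Shift a sequential list in place and return the number of element writes."""
    if not items:
        return 0
    writes = 0
    held = items[-1]  # hold the last element (G) somewhere safe
    for i in range(len(items) - 1, 0, -1):
        items[i] = items[i - 1]  # move each element down one space
        writes += 1
    items[0] = held  # place the held element at the beginning
    writes += 1
    return writes


letters = list("ABCDEFG")
writes = move_last_to_front(letters)
# letters is now G, A, B, C, D, E, F and every slot in the list was rewritten.
```

The number of writes grows with the length of the list, which is exactly the cost the linked representation avoids.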

Here’s where the flexibility of linking information should become self-evident. Here’s our original list:

And here is the updated list. G now links to A and F now terminates as the end of the list.
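The same move on a linked list can be sketched like this. The `Node` class and helper names are assumptions made for illustration, and this is a plain singly linked list rather than a reconstruction of IPL. Only two links change: F stops pointing at G, and G starts pointing at A.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next  # the "arrow" to the following element, or None


def build(values):
    """Build a singly linked list and return its head node."""
    head = None
    for value in reversed(values):
        head = Node(value, head)
    return head


def to_list(head):
    """Follow the arrows and collect the values in order."""
    out = []
    while head is not None:
        out.append(head.value)
        head = head.next
    return out


def relink_last_to_front(head):
    """Move the final node to the front by redrawing two arrows."""
    if head is None or head.next is None:
        return head
    prev = head
    while prev.next.next is not None:  # walk to the second-to-last node (F)
        prev = prev.next
    last = prev.next   # the last node (G)
    prev.next = None   # F now terminates the list
    last.next = head   # G now links to A
    return last        # G is the new head


head = relink_last_to_front(build("ABCDEFG"))
```

No element is copied or moved. In this sketch the code still has to walk the list to find F, which is one of the trade-offs of a singly linked structure; keeping a reference to the tail would avoid that walk.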

It’s simply less work.

There are trade-offs to all this, of course. But this isn’t an introduction to computer science. It’s simply meant to show how a simple idea in computer science has propagated across all media, both new (hypertext on the World Wide Web) and old (filmmaking).

Less work and more flexibility afford experimentation for both a 1950s computer and a seasoned film editor. Murch later reflected,

When you actually had to make the cut physically on film, you naturally tended to think more about what you were about to do. […] When I was in grade school they made us write our essays in ink for the same reason. Pencil was too easy to erase. The other “missing” advantage to linear editing was the natural integration of repeatedly scanning through rolls of film to get to a shot you wanted. Inevitably, before you ever got there, you found something that was better than what you had in mind. With random access, you immediately get what you want. Which may not be what you need.

(Apple, Inc. “Walter Murch: An Interview with the Editor of ‘Cold Mountain.’” Apple - Pro - Profiles - Walter Murch, p. 3. Accessed January 7, 2024.)

Herein lies the drawback to all this instantaneous, linked data. It doesn’t easily provide context for our information. Especially if the arrow only points one way.

Going Beyond the Frame

Beyond the Frame was always a place for me to link together disparate ideas. The blogging medium works well because it is faster to publish than a book or a magazine article. And yet I have to refine my ideas because they do, indeed, get published.

My interests and priorities change over time. But the links between old ideas and new endeavors only deepen. The last time I mentioned Murch on Beyond the Frame was over 10 years ago in a completely different context. New perspectives provide fertile ground for new connections. That’s why I’m always thinking about how to improve the structure of information in this blog and refine its presentation. At one point, Beyond the Frame focused squarely on new media; computation was backgrounded. Back then the slogan was “composition, time dilation, and an opportunity for the sublime and the serendipitous.” But now I’d say that computation is the central theme and culture is the connective tissue.

For instance, some readers may have noticed that the header design and logo have changed for 2024.

Last year I contracted Torinese designer Alma Gianarro to come up with something that referenced the boxes and arrows of a linked list. When she showed me her initial concepts, I was surprised to see how much they reminded me of early computational art by Vera Molnár and Frieder Nake. So we took the theme and ran with it.

The connection to early digital art was unintentional. As mentioned earlier, this type of art relies heavily on algorithmic processes that don’t immediately reflect the themes I wanted to embody in the logo. But then I realized that Alma’s design surfaced the link between culture and technology that runs through my life and this blog. From computation to composition and from fractals to film - all disciplines intersect at some point.